I went to an embedded binary world and back again!

Back in 20230706-binary-blog I wrote the backend for this blog in Rust with images and posts embedded in the binary. As my first Rust project, it was incredibly messy, but it got the job done and I learned a lot! In this post, I’ll share how I built a more dynamic CMS to encourage writing!

But, after running it for a few months, anything to do with actually editing content began to feel really tedious. The CI pipeline in GitHub was slow due to Rust compilation times, and the cycle of drafting posts and testing for broken links was a chore! Sure, I could have found ways to optimise this a bit, but it wouldn’t have made it easier to author content. And a blog, after all, is about writing content, not just about building the blog, despite what most software engineering blogs would have you believe.

So, inspired by folks like fasterthanli.me, I set about moving completely away from the embedded binary and towards a more dynamic CMS (content management system) instead. The requirements were straightforward:

- Store all images and Markdown content in an object store. I first considered a peer-to-peer style mechanism like automerge.org/ between browser and server, but after playing with it for a while it was much simpler to just have an object storage bucket. I’m the only one editing the content, and the offline editing opportunity would be rare anyway. While the loading performance¹ is not as fast as static files in-memory or on local disk, the content can be aggressively cached, and the site is very horizontally scalable.

- Support SVG and JPG/WEBP² upload, with built-in down-scaling and thumbnail generation. I don’t want to have to care about this manually. Make it easy for me to embed images in as few clicks as possible.

- Markdown to HTML rendering. I briefly looked at reStructuredText but couldn’t find a library with good enough support for it.

- Content mutation. Automatically number headers and generate a table of contents in the resulting HTML.

- Validate. Render previews of unpublished draft posts, detect invalid Markdown, detect broken image and post links, detect spelling and grammar errors.

1. The twin binaries of my blog Tatooine

I didn’t want to have to care about authentication or login to access an admin/editor view. So instead I’ve built two binaries. The “viewer” binary runs with read-only access to the object storage and with public HTTP ingress, while the “editor” binary runs with read-write access inside my Tailscale network, only accessible to devices authenticated to it. Same code base, same data access layer.

Like the blog before it, this is hosted on my local Hensteeth cluster under my desk.

2. Object storage tree layout

Naïvely, an object storage structure for our images and Markdown posts seems easy. Just stick the images in one tree and the post contents in another. Job done. Not so fast! Metadata made this challenging since I needed to answer bulk queries efficiently.

For the index page, I needed to list all the posts and display their titles, dates, labels, etc. If I had to scan and read each Markdown document to extract this, I’d end up with a classic N+1 problem (one list request plus N reads) while only using a fraction of the bytes I’d read from object storage. Instead, I store a zero-byte metadata item next to each post, whose file name is a base64-encoded postcard structure.

    /posts/{my-post-slug}/content.md
    /posts/{my-post-slug}/props/{BASE64 encoded serialized properties}

This means that with one request I can list all these props files from the object store, deserialize them, and return a list of all the posts in date order.
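As a rough sketch of that listing step (not the blog's actual code), the function below filters the keys returned by one list call down to the props entries, decodes each one, and sorts the result newest first. `PostProps`, `encode_props`, and `build_index` are hypothetical names, the fields and the sort direction are assumed, and a plain hex encoding stands in for the real base64-encoded postcard structure so the example needs only the standard library.

```rust
/// Properties recovered from a zero-byte props key (hypothetical shape).
#[derive(Debug, Clone, PartialEq)]
pub struct PostProps {
    pub slug: String,
    /// ISO 8601 date, so lexical order matches date order.
    pub date: String,
    pub title: String,
}

/// Stand-in encoder: hex of "date\ntitle".
/// Assumption: the real site uses base64 over a postcard-serialized struct.
pub fn encode_props(date: &str, title: &str) -> String {
    format!("{date}\n{title}")
        .bytes()
        .map(|b| format!("{b:02x}"))
        .collect()
}

/// Inverse of `encode_props`; returns None for anything malformed.
fn decode_props(encoded: &str) -> Option<(String, String)> {
    if encoded.len() % 2 != 0 || !encoded.is_ascii() {
        return None;
    }
    let bytes = (0..encoded.len())
        .step_by(2)
        .map(|i| u8::from_str_radix(&encoded[i..i + 2], 16).ok())
        .collect::<Option<Vec<u8>>>()?;
    let text = String::from_utf8(bytes).ok()?;
    let (date, title) = text.split_once('\n')?;
    Some((date.to_string(), title.to_string()))
}

/// Build the post index from the keys returned by a single list call.
/// Keys that are not props entries (content, labels, ...) are skipped.
pub fn build_index(keys: &[&str]) -> Vec<PostProps> {
    let mut posts: Vec<PostProps> = keys
        .iter()
        .filter_map(|key| {
            // Expected shape: /posts/{slug}/props/{encoded}
            let rest = key.trim_start_matches('/').strip_prefix("posts/")?;
            let (slug, tail) = rest.split_once('/')?;
            let encoded = tail.strip_prefix("props/")?;
            let (date, title) = decode_props(encoded)?;
            Some(PostProps { slug: slug.to_string(), date, title })
        })
        .collect();
    // Newest first (assumed ordering for the index page).
    posts.sort_by(|a, b| b.date.cmp(&a.date));
    posts
}
```

The point of the design is that no object body is ever read: everything the index page needs travels in the key names themselves.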

An added complexity was that I wanted to add labels to the posts. But labels can be quite long and most object stores have a limit to the file name length. Adding an arbitrary number of these labels and then base64 encoding them would quickly start eating into the file name limit. So I break those out into separate zero-byte items in the list as well.

    /posts/{my-post-slug}/content.md
    /posts/{my-post-slug}/labels/{label-one}
    /posts/{my-post-slug}/labels/{label-two}

The editor has to do some fairly complex gymnastics to delete and replace the props file and remove or add any changes to the labels. But that’s easy enough.
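The label half of those gymnastics boils down to a set difference. A minimal sketch, assuming a hypothetical `Op` type and `plan_label_update` function (neither is from the real code base): compare the label keys that already exist with the labels the editor wants, then emit only the deletes and puts needed.

```rust
use std::collections::BTreeSet;

/// One mutation against the object store (hypothetical type).
#[derive(Debug, PartialEq, Eq)]
pub enum Op {
    Put(String),
    Delete(String),
}

/// Work out which zero-byte label keys to delete and which to create
/// so that the stored labels for `slug` match `wanted_labels`.
pub fn plan_label_update(slug: &str, existing_keys: &[&str], wanted_labels: &[&str]) -> Vec<Op> {
    let prefix = format!("/posts/{slug}/labels/");

    // Labels currently present, taken from the listing of the post's keys.
    let current: BTreeSet<&str> = existing_keys
        .iter()
        .filter_map(|key| key.strip_prefix(prefix.as_str()))
        .collect();
    let wanted: BTreeSet<&str> = wanted_labels.iter().copied().collect();

    let mut ops = Vec::new();
    // Present but no longer wanted: delete.
    for label in current.difference(&wanted) {
        ops.push(Op::Delete(format!("{prefix}{label}")));
    }
    // Wanted but not yet present: create.
    for label in wanted.difference(&current) {
        ops.push(Op::Put(format!("{prefix}{label}")));
    }
    ops
}
```

Labels that are unchanged produce no operations at all, which keeps the number of write requests proportional to the size of the edit.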

Images are easier, but have the problem of needing to store multiple variants of the same image. I tried a few options, but ended up with a duplicate slug:

    /images/{my-image-slug}.{ext}/{my-image-slug}.{ext}
    /images/{my-image-slug}.{ext}/{my-image-slug}.{variant-ext}
    /images/{my-image-slug}.{ext}/{my-image-slug}.{other-variant-ext}

3. HTMX

While not essential here, HTMX is a technology I’ve wanted more experience with for future UI work. It enables server-side snippet rendering with minimal network requests when users navigate between pages. It also degrades gracefully when JavaScript is unavailable and handles full refreshes well. I’ve built this whole site with just the <body hx-boost="true"> attribute.

The backend for this was a bit tricky as I have to inspect each incoming request for the HX-Request header and return either the entire page or just the requested inner content. Most views are rendered wrapped in a function to do this:

    render_body_html_or_htmx(
        StatusCode::OK,
        "Ben's Blog",
        html! {
            main.container {
                ...
            }
        },
        render_body_html,
        htmx_context,
    ).into_response()

That html! macro is from maud.lambda.xyz. It checks the template at compile time, which is great for ensuring the emitted HTML is always well-formed.
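The header check behind a wrapper like that can be sketched with nothing but the standard library. This is an illustration under stated assumptions, not the real `render_body_html_or_htmx`: `is_htmx_request` and `render_page` are hypothetical names, headers are modelled as a plain map, `title` and `inner` are assumed to be already escaped, and the fragment response is assumed to carry a `<title>` alongside the inner content.

```rust
use std::collections::HashMap;

/// True when the request was issued by HTMX, which sends `HX-Request: true`.
/// Header names are compared case-insensitively.
pub fn is_htmx_request(headers: &HashMap<String, String>) -> bool {
    headers
        .iter()
        .any(|(name, value)| name.eq_ignore_ascii_case("HX-Request") && value.as_str() == "true")
}

/// Return either the whole document or just the part HTMX swaps in.
/// Assumption: `title` and `inner` are already-escaped HTML strings.
pub fn render_page(headers: &HashMap<String, String>, title: &str, inner: &str) -> String {
    if is_htmx_request(headers) {
        // Boosted navigation: only the new title and inner content are needed.
        format!("<title>{title}</title>{inner}")
    } else {
        // First load, full refresh, or no JavaScript: send the complete page.
        format!(
            "<!DOCTYPE html><html><head><title>{title}</title></head>\
             <body hx-boost=\"true\">{inner}</body></html>"
        )
    }
}
```

Because both branches render the same inner content, a page behaves identically whether it is reached by a boosted link or a hard refresh.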

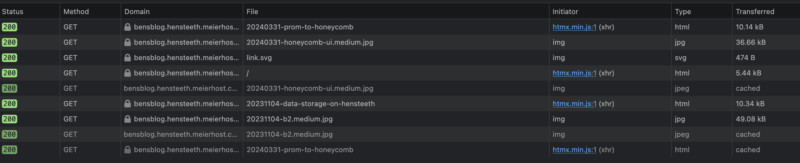

Switching pages through the blog emits just a single network request for a snippet of HTML to change, caches well on the frontend, and supports a history stack so navigating back and forwards can use this cache.

4. Image scaling on upload

I didn’t want to have to manually rescale screenshots and uploaded images or convert them from PNG to JPEG/WEBP. Why can’t the CMS do this for me?

So an uploaded JPEG/PNG/WEBP/BMP is first converted to lossless WEBP, then to a medium-resolution JPEG for embedding in the blog and a thumbnail that’s useful in the CMS editor. This is all done using the Rust image crate.
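The arithmetic behind each down-scaled variant is just an aspect-ratio-preserving fit. The sketch below is illustrative only: `fit_within` is a hypothetical helper, the maximum side lengths are made up, and in practice the image crate's own resize functions take care of this.

```rust
/// Largest (width, height) that fits inside a `max_side` square while
/// keeping the aspect ratio. Images that already fit are never upscaled.
pub fn fit_within(width: u32, height: u32, max_side: u32) -> (u32, u32) {
    let longest = width.max(height);
    if longest == 0 || longest <= max_side {
        return (width, height);
    }
    let scale = |side: u32| -> u32 {
        // u64 arithmetic avoids overflow; round to nearest, keep at least 1 pixel.
        let scaled = (side as u64 * max_side as u64 + longest as u64 / 2) / longest as u64;
        scaled.max(1) as u32
    };
    (scale(width), scale(height))
}
```

For example, `fit_within(4000, 3000, 1600)` yields `(1600, 1200)`, while an 800x600 screenshot passes through unchanged.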

The SVGs are pretty small anyway and don’t get resized. These are great for technical diagrams. I use xmlparser to check that the SVG content is valid XML.

The file sizes are generally excellent. Most of the medium resolution JPEGs are only a few tens of kilobytes.

5. Markdown modification and validation on render

I use the pulldown-cmark library to parse and convert Markdown to HTML. One of the neat things this does is work as a “pull” parser. A pull parser operates like an iterator, and you can always add intercept or filter stages to this iterator. This means that you can add stages that modify the emitted HTML or check it as you go.
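To make the idea of an intercept stage concrete, here is a self-contained sketch that uses a toy `Event` enum rather than pulldown-cmark's real event type. The hypothetical `check_links` stage passes every event through untouched while recording relative link and image targets that are missing from a set of known paths.

```rust
use std::collections::HashSet;

/// Toy stand-in for a Markdown parser's events (not pulldown-cmark's type).
#[derive(Debug, Clone, PartialEq)]
pub enum Event {
    Text(String),
    Link(String),
    Image(String),
}

/// An intercept stage: yields the same events it receives, and as a side
/// effect pushes unknown relative targets into `broken`.
pub fn check_links<'a, I>(
    events: I,
    known: &'a HashSet<String>,
    broken: &'a mut Vec<String>,
) -> impl Iterator<Item = Event> + 'a
where
    I: Iterator<Item = Event> + 'a,
{
    events.inspect(move |event| {
        if let Event::Link(target) | Event::Image(target) = event {
            // Absolute http/https links are not checked against the CMS.
            let external = target.starts_with("http://") || target.starts_with("https://");
            if !external && !known.contains(target) {
                broken.push(target.clone());
            }
        }
    })
}
```

Because the stage is itself an iterator, it composes with further stages and costs nothing until the final consumer (the HTML writer, in a real pipeline) pulls events through it.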

The stages I’ve added are:

- For any <img> and <a> tags emitted that have a relative link (not http/s), check that they are in the set of known images and posts in the CMS.

- For any HTML header elements, keep track of the nesting and number each heading like 1., 2.1., etc. Wrap them in an <a> anchor tag to allow linking to specific sections and to support the generated table of contents which gets inserted at the top of the rendered content.

So posts get a nice little table of contents at the top and the headers are numbered and linked with a focus style on hover.
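The numbering itself only needs one counter per nesting level. A minimal sketch, with `HeadingNumberer` as a hypothetical name and the exact label format assumed from the examples above:

```rust
/// Tracks one counter per heading depth and produces labels
/// such as "1." or "2.1.".
#[derive(Default)]
pub struct HeadingNumberer {
    counters: Vec<u32>,
}

impl HeadingNumberer {
    /// `depth` is 1 for the top-most heading level used in a post.
    pub fn number(&mut self, depth: usize) -> String {
        let depth = depth.max(1);
        // Truncates counters of deeper levels, or pads skipped levels with 0
        // (so jumping from depth 1 straight to depth 3 gives "1.0.1.").
        self.counters.resize(depth, 0);
        self.counters[depth - 1] += 1;
        self.counters.iter().map(|n| format!("{n}.")).collect()
    }
}
```

Feeding it depths 1, 2, 2, 1, 2 yields "1.", "1.1.", "1.2.", "2.", "2.1."; the same labels can then be reused for the anchor text and the table of contents entries.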

6. Spell checking

I like having some basic spelling and grammar checking when I’m writing posts. I first tried just adding lang="en" spellcheck="true" to the <textarea> elements. But this doesn’t really work or get respected in the browsers that I use, so I moved on.

The second idea was to use the zspell crate to run a basic Hunspell style check when the post is submitted and return some hints on invalid words and suggestions. However, this is a slow iteration process, and it would be relatively complex to match the errors to words in the <textarea>. That’s far more complex than I wanted.

Finally, I looked at a browser extension instead. I used to use Grammarly, but stopped since I was uncomfortable with the privacy and account pricing. Then I found LanguageTool, which provides an open source checker including an in-browser personal dictionary. I installed this on my Hensteeth cluster within the Tailnet and configured the browser to hit that.

So it’s not built into the blog itself, but it actually provides more value since I can use it across any sites that have text areas.

7. Other bits and pieces

- Tracing and logs go to Honeycomb.io.

- Caching headers are applied to the images and HTML pages with ETag calculations.

- Some small SVGs for favicons, social, and link icons are embedded into the binary as static data.
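For the ETag bullet above, the general shape is: hash the response body, send the hash as a quoted ETag, and answer 304 when the client's If-None-Match already contains it. The sketch below uses hypothetical `etag_for` and `is_not_modified` helpers and the standard library's `DefaultHasher` purely to stay dependency-free; its algorithm is not guaranteed to stay the same across Rust releases, so a real deployment would more likely use a stable digest.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Derive a quoted ETag value from the response body.
/// Assumption: DefaultHasher is a stand-in for a stable content digest.
pub fn etag_for(body: &[u8]) -> String {
    let mut hasher = DefaultHasher::new();
    body.hash(&mut hasher);
    format!("\"{:016x}\"", hasher.finish())
}

/// True when the client's If-None-Match header already lists this ETag,
/// in which case the server can reply 304 Not Modified with no body.
pub fn is_not_modified(if_none_match: Option<&str>, etag: &str) -> bool {
    match if_none_match {
        Some(header) => header.split(',').map(str::trim).any(|candidate| {
            // Weak validators (W/"...") match on the quoted value alone.
            candidate == "*" || candidate.strip_prefix("W/").unwrap_or(candidate) == etag
        }),
        None => false,
    }
}
```

This pairs well with the object store latency: a revalidation that ends in a 304 skips sending the body again, even if the server still had to produce it to compute the hash.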

8. What remains

Just. Write. Content.

And some development continues in github.com/astromechza/bloog:

- Better in-process caching to reduce the effect of the object storage read latency

- Add missing <meta ..> tags used for social media previews

- Robots.txt to set a scraping/training-data policy

- An embedded profile image

¹ Usually around 300ms from the Hensteeth cluster to the Backblaze B2³ data centers in the US. I could move everything over to a GCS or S3 bucket in London or move my Backblaze account to Europe. Or just cache stuff better. Currently going with the latter until anyone actually cares.

² Why WEBP? It’s technically more efficient for lossless image encoding than PNG. Unfortunately the Rust image crate doesn’t support lossy WEBP encoding, so I ended up using JPEG for the lower resolutions.

³ I use Backblaze here for historic reasons: it’s where all my photos, documents, and other data are backed up. So I just used that for the blog storage too.